Is EAGLE-3 Speculative Decoding Lossless?

EAGLE-3 (NeurIPS 2025) is the current gold standard for speculative decoding, with large speedups in SGLang and vLLM. In brief, EAGLE-3's draft model fuses low-, middle-, and high-level features from the target model, and uses a technique called training-time test to simulate multi-step generation during training.

The other day, as I was looking through the EAGLE-3 codebase, I stumbled upon something a little strange.

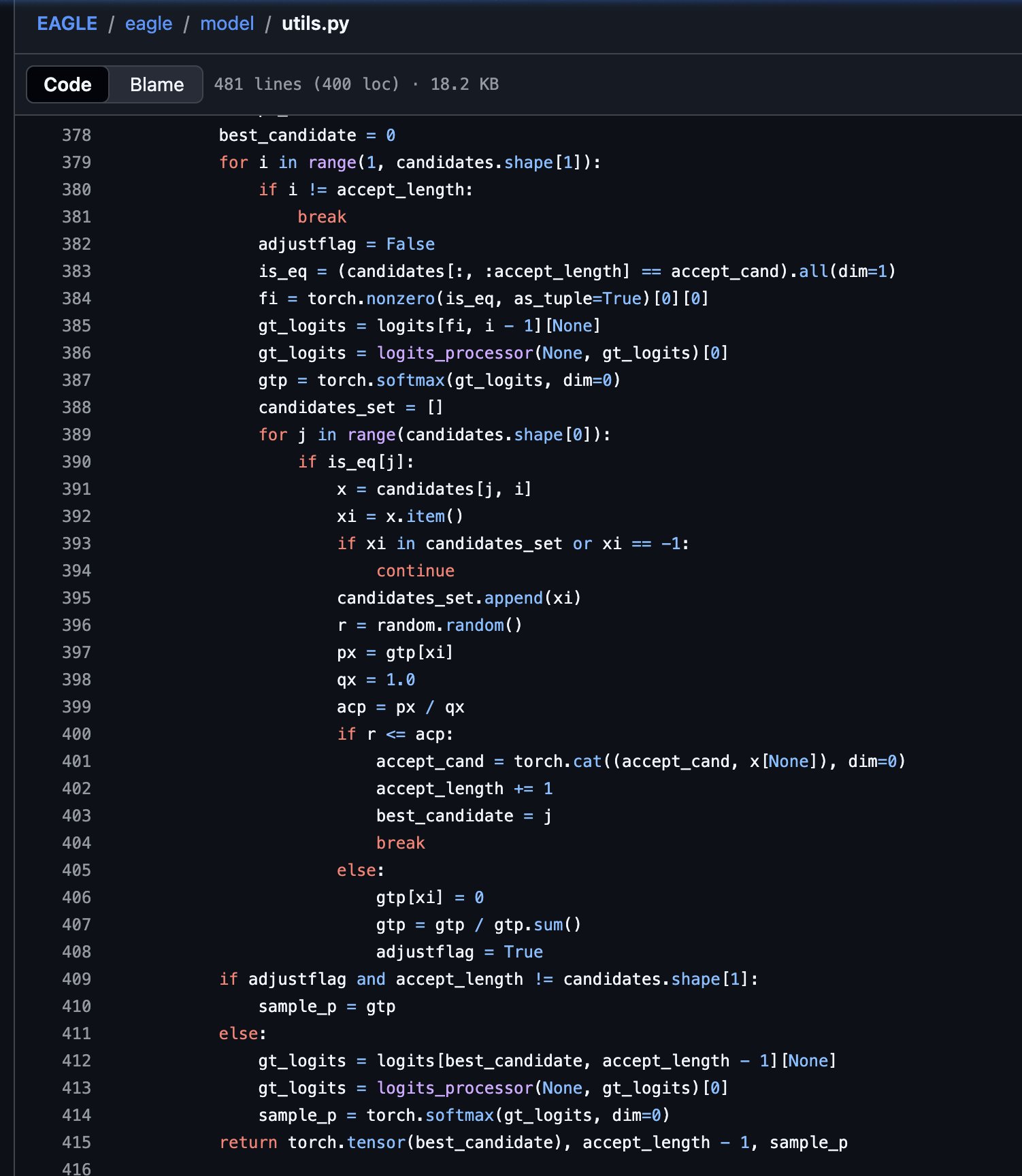

This is their non-greedy implementation. They set \(q(x)=1\) and zero out rejected tokens in the non-greedy path, which skips the residual distribution step needed for a lossless match to \(p(x)\).

The lossless rule in speculative decoding

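For non-greedy SD, when the draft model proposes a token \(x\) sampled from its distribution \(q(x)\), the target model with distribution \(p(x)\) accepts it with probability:

\[ \min\left(1, \frac{p(x)}{q(x)}\right) \]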

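If the proposal is rejected, the replacement token must be sampled from the residual distribution:

\[ p'(x) = \frac{\max\left(0,\; p(x) - q(x)\right)}{\sum_{x'} \max\left(0,\; p(x') - q(x')\right)} \]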

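This residual can be derived by multiplying the acceptance probability by \(q(x)\), which gives the accepted mass \(\min(p(x), q(x))\), and then subtracting this result from the target distribution \(p(x)\). What remains is \(\max(0, p(x) - q(x))\), up to normalization. If the reader is interested, please check out this paper.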
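To make the rule concrete, here is a minimal sketch that computes the exact distribution of the emitted token for a single draft position and checks that it equals \(p(x)\). The function names and the three-token toy distributions are assumptions made up for illustration; none of this is taken from the EAGLE-3 codebase.

```python
from fractions import Fraction

def accept_prob(p_x, q_x):
    """Probability of accepting a drafted token x: min(1, p(x) / q(x))."""
    return min(Fraction(1), p_x / q_x)

def residual(p, q):
    """Residual distribution norm(max(0, p - q)), sampled after a rejection."""
    raw = [max(Fraction(0), pi - qi) for pi, qi in zip(p, q)]
    total = sum(raw)
    if total == 0:
        # p == q: a rejection never happens, so the residual is never used.
        return raw
    return [r / total for r in raw]

def output_distribution(p, q):
    """Exact distribution of the emitted token for one draft position."""
    # Mass that arrives via "drafted x, then accepted x".
    accepted = [
        qi * accept_prob(pi, qi) if qi > 0 else Fraction(0)
        for pi, qi in zip(p, q)
    ]
    # Total probability that the drafted token is rejected.
    reject = 1 - sum(accepted)
    res = residual(p, q)
    return [a + reject * r for a, r in zip(accepted, res)]

# Hypothetical toy distributions over a 3-token vocabulary.
p = [Fraction(1, 2), Fraction(3, 10), Fraction(1, 5)]  # target
q = [Fraction(1, 5), Fraction(1, 5), Fraction(3, 5)]   # draft

# Accepted mass plus rejected mass routed through the residual recovers p.
assert output_distribution(p, q) == p
```

Exact fractions are used instead of floats so the equality check is not blurred by rounding. The same check should pass for any pair of valid distributions, which is the sense in which the rule is lossless.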

In the current EAGLE-3 implementation, the non-greedy branch does not compute \(q(x)\) at all. Instead, it sets \(q(x) = 1\) and only uses the target distribution \(p(x)\) after candidate masking. So \(q(x)\) is treated as a constant, and acceptance uses \(p(x)\) directly. Additionally, rejected tokens are zeroed out rather than replaced via the residual distribution.

This essentially breaks the math. The lossless guarantee depends on the acceptance ratio and the residual distribution both using the same draft distribution \(q(x)\). If \(q(x)\) is not the true draft distribution, the accept step and the replacement step no longer cancel out in expectation, so the final distribution deviates from \(p(x)\) except in special cases (for example, greedy decoding or \(q \approx p\)).
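The dependence on a shared \(q(x)\) can be seen in a small exact calculation. The sketch below is a toy mismatch, not a model of EAGLE-3's code path: tokens are drafted and accepted using one distribution, while the residual is built from a different, hypothetical `q_wrong`. All names and numbers are assumptions chosen for illustration.

```python
from fractions import Fraction

def emitted_distribution(p, q_draft, q_residual):
    """Exact one-position output when tokens are drafted from q_draft and
    accepted with min(1, p/q_draft), but rejections are resampled from
    norm(max(0, p - q_residual))."""
    # q(x) * min(1, p(x)/q(x)) simplifies to min(p(x), q(x)).
    accepted = [min(pi, qi) for pi, qi in zip(p, q_draft)]
    reject = 1 - sum(accepted)
    raw = [max(Fraction(0), pi - qi) for pi, qi in zip(p, q_residual)]
    total = sum(raw)
    res = [r / total for r in raw] if total else raw
    return [a + reject * r for a, r in zip(accepted, res)]

def total_variation(a, b):
    """Total variation distance between two distributions."""
    return sum(abs(x - y) for x, y in zip(a, b)) / 2

# Hypothetical toy distributions over a 3-token vocabulary.
p = [Fraction(1, 2), Fraction(3, 10), Fraction(1, 5)]        # target
q = [Fraction(1, 5), Fraction(1, 5), Fraction(3, 5)]         # true draft
q_wrong = [Fraction(3, 5), Fraction(1, 5), Fraction(1, 5)]   # mismatched q

matched = emitted_distribution(p, q, q)            # same q in both steps
mismatched = emitted_distribution(p, q, q_wrong)   # residual uses the wrong q

print(total_variation(matched, p))
print(total_variation(mismatched, p))
```

With the same \(q(x)\) in both steps the distance to \(p(x)\) is zero; with the mismatched residual it is not. How large the gap is depends entirely on the distributions involved, so this toy says nothing about the size of any deviation in a real system.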

In short, the non-greedy path appears to trade the exact lossless property for a simpler target-only heuristic. The open question is whether this produces meaningful quality regressions in real-world workloads or whether the deviation is small in practice.